On September 25, NVIDIA will open source the Audio2Face models and SDKs, allowing all game and 3D application developers to build and deploy high-fidelity characters with advanced animations. NVIDIA will also open source the Audio2Face training framework, which anyone can use to fine-tune and customize the models for specific use cases.

NVIDIA Audio2Face accelerates the creation of realistic digital characters through generative AI-driven, real-time facial animation and lip-sync. Audio2Face uses AI to generate realistic facial animations from audio input. The technology analyzes acoustic features such as phonemes and intonation to create a stream of animation data, which it maps to the character's facial expressions. This animation data can be used to render preset assets offline, or streamed in real time to dynamic, AI-powered characters, enabling accurate lip-sync and emotional expression.
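To make the idea of an audio-driven animation data stream concrete, here is a minimal toy sketch in Python. It is not the Audio2Face model or its SDK API: the `AnimationFrame` structure, the `animation_stream` function, and the `gain` parameter are all hypothetical, and a simple loudness heuristic stands in for the AI model that analyzes phonemes and intonation. It only shows the general shape of the pipeline, in which audio goes in and one time-stamped frame of facial weights comes out per animation tick.

```python
import math
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class AnimationFrame:
    """One entry in the animation data stream (hypothetical structure)."""
    time_s: float    # start time of the audio window, in seconds
    jaw_open: float  # facial weight in [0, 1]; 0 = closed, 1 = fully open


def animation_stream(samples: List[float], sample_rate: int,
                     fps: int = 30, gain: float = 4.0) -> Iterator[AnimationFrame]:
    """Yield one animation frame per 1/fps seconds of audio.

    Stand-in heuristic (an assumption, not the real model): the loudness
    (RMS) of each audio window drives how far the jaw opens.
    """
    window = sample_rate // fps  # audio samples per animation frame
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        rms = math.sqrt(sum(x * x for x in chunk) / window)
        yield AnimationFrame(time_s=start / sample_rate,
                             jaw_open=min(1.0, gain * rms))


# Usage: half a second of silence followed by half a second of a 220 Hz tone.
rate = 16000
silence = [0.0] * (rate // 2)
tone = [0.5 * math.sin(2 * math.pi * 220 * n / rate) for n in range(rate // 2)]
frames = list(animation_stream(silence + tone, rate))
```

Because `animation_stream` is a generator, the same code covers both uses described above: collecting all frames into a list corresponds to offline rendering, while consuming frames one at a time as audio arrives corresponds to real-time streaming.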

The Audio2Face model is widely used across industries such as gaming, media and entertainment, and customer service. Many independent software vendors (ISVs) and game developers have adopted Audio2Face in their applications. Game developers include Codemasters, GSC Game World, NetEase, and Perfect World. ISVs include Convai, Inworld AI, Reallusion, Streamlabs, and UneeQ.

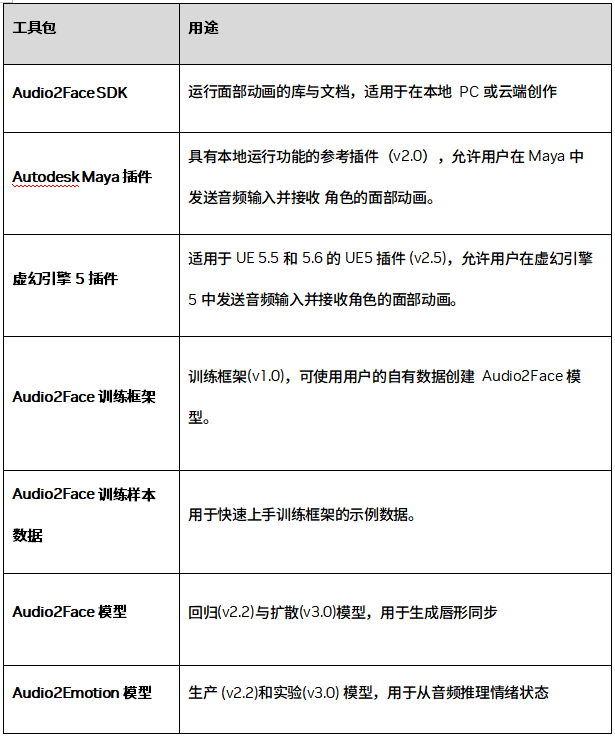

Here is the complete list of open source tools. For more details, see NVIDIA ACE for game development.

About NVIDIA

NVIDIA (NASDAQ: NVDA) is a global leader in accelerated computing.